AI Model Matches Radiologists’ Accuracy Identifying Breast Cancer in MRIs

Researchers from NYU Langone Health aim to improve breast cancer diagnostics with a new AI model. Recently published in Science Translational Medicine, the study outlines a deep learning framework that predicts breast cancer from MRIs as accurately as board-certified radiologists. The research could help create a foundational framework for implementing AI-based cancer diagnostic models in clinical settings.

“Breast MRI exams are difficult and time-consuming to interpret, even for experienced radiologists. AI has tremendous potential in improving medical diagnosis, as it can learn from tens of thousands of exams. Using AI to assist radiologists can make the process more accurate, and provide a higher level of confidence in the results,”

said study senior author Krzysztof J. Geras, an assistant professor in the Department of Radiology at the NYU Grossman School of Medicine.

As a sensitive tool in breast cancer diagnostics, MRIs can help identify malignant lesions that are sometimes missed in mammograms and clinical examinations.

Dynamic contrast-enhanced magnetic resonance imaging (DCE-MRI) is often used to screen high-risk patients, and its applications are expanding. It can help doctors investigate potentially suspicious lesions or evaluate the extent of disease in newly diagnosed patients. This type of imaging can also inform a doctor’s treatment plan, including whether to perform a biopsy and how extensive a surgery needs to be. Both decisions influence short- and long-term patient outcomes.

According to the researchers, MRIs have untapped potential in predicting disease pathology and achieving a better understanding of tumor biology. Developing AI models from large, well-annotated datasets could be key in refining the sensitivity of these scans and reducing unnecessary biopsies.

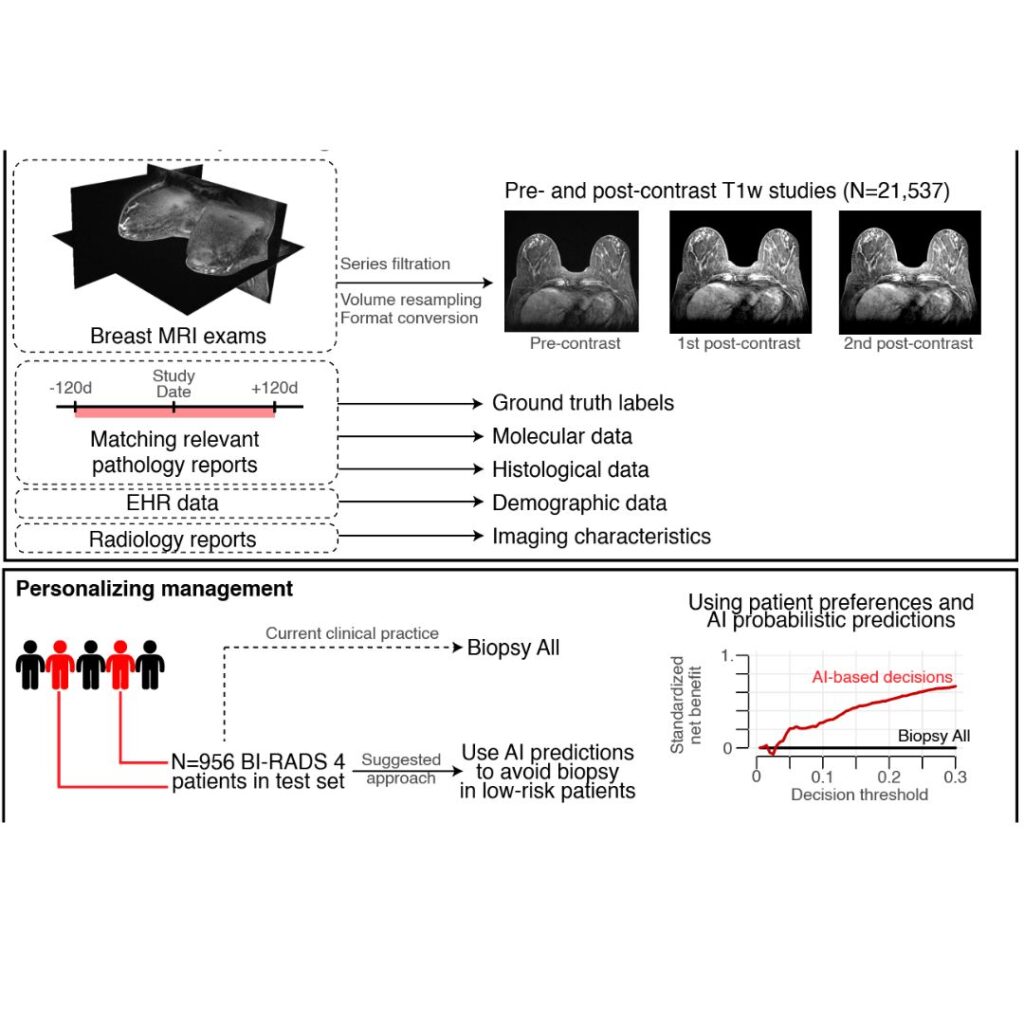

The researchers developed their AI model for breast cancer diagnosis using a dataset extracted from clinical exams performed at an NYU Langone Health breast imaging site. The dataset consisted of bilateral DCE-MRI studies from patients undergoing high-risk screening, preoperative planning, routine surveillance, or follow-up after a suspicious finding.

They trained an ensemble of deep neural networks that use 3D convolutions to detect spatiotemporal features, using 14,198 labeled MRI examinations and mixed-precision training with the open-source NVIDIA Apex library. According to the team, using this library made it possible to increase the batch size during training.
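Why mixed precision leaves room for larger batches comes down to bytes per value: FP16 stores each activation in 2 bytes instead of FP32’s 4. The sketch below is idealized arithmetic, not a profile of the NYU models. The 16 GiB activation budget and the per-sample activation count are hypothetical numbers, and real mixed-precision training (with Apex or PyTorch’s built-in automatic mixed precision) keeps some tensors, such as the weights, in FP32, so actual gains are typically smaller than 2×.

```python
# Idealized activation-memory arithmetic for FP32 vs. FP16 training.
# All sizes below are hypothetical and chosen only for illustration.

GIB = 1024 ** 3


def max_batch_size(budget_bytes: int, elements_per_sample: int, bytes_per_element: int) -> int:
    """Largest batch whose stored activations fit in the memory budget."""
    return budget_bytes // (elements_per_sample * bytes_per_element)


# Hypothetical: GPU memory left for activations after weights and optimizer state.
ACTIVATION_BUDGET = 16 * GIB
# Hypothetical: activation values kept per sample across all layers of a 3D CNN.
ELEMENTS_PER_SAMPLE = 512 * 512 * 96 * 8

fp32_batch = max_batch_size(ACTIVATION_BUDGET, ELEMENTS_PER_SAMPLE, bytes_per_element=4)
fp16_batch = max_batch_size(ACTIVATION_BUDGET, ELEMENTS_PER_SAMPLE, bytes_per_element=2)

print(f"FP32 batch size: {fp32_batch}")  # FP32 batch size: 21
print(f"FP16 batch size: {fp16_batch}")  # FP16 batch size: 42
```

With full 3D MRI volumes, per-sample activations are large, so halving their storage is often the difference between a batch of a handful of studies and a usefully larger one.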

The networks were trained using the cuDNN-accelerated PyTorch framework on the university’s HPC cluster equipped with 136 NVIDIA V100 GPUs and NVIDIA NVLink for scaling memory and performance.

Multi-GPU training was accelerated with the NVIDIA Collective Communications Library (NCCL), and training a single model took 12 days on average.
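In data-parallel training, each GPU computes gradients on its own share of the batch, and a collective all-reduce averages them so every replica applies the same update; PyTorch’s `DistributedDataParallel` performs this through NCCL when the `nccl` backend is selected. Ring all-reduce is one of the algorithms NCCL can use. The toy simulation below runs in a single process on plain Python lists to show the two phases (reduce-scatter, then all-gather). It does not call NCCL, and the function name `ring_allreduce` is hypothetical; it makes no claim about how the NYU team configured its jobs.

```python
# Single-process simulation of ring all-reduce (gradient averaging).
# Illustrative only: real NCCL runs these steps concurrently across GPUs.
from typing import List


def ring_allreduce(grads: List[List[float]]) -> List[List[float]]:
    """Average equal-length gradient vectors held by n simulated workers."""
    n = len(grads)
    length = len(grads[0])
    # Split each vector into n contiguous chunks; chunk k covers bounds[k].
    bounds = [(k * length // n, (k + 1) * length // n) for k in range(n)]
    buf = [list(g) for g in grads]  # each worker's local copy

    # Phase 1, reduce-scatter: after n - 1 steps, worker i holds the
    # fully summed chunk (i + 1) % n.
    for step in range(n - 1):
        # Snapshot outgoing chunks so all sends in a step happen "at once".
        outgoing = []
        for i in range(n):
            c = (i - step) % n
            lo, hi = bounds[c]
            outgoing.append((c, buf[i][lo:hi]))
        for i, (c, data) in enumerate(outgoing):
            dst = (i + 1) % n
            lo, _ = bounds[c]
            for j, v in enumerate(data):
                buf[dst][lo + j] += v

    # Phase 2, all-gather: pass each completed chunk around the ring so
    # every worker ends up with every summed chunk.
    for step in range(n - 1):
        outgoing = []
        for i in range(n):
            c = (i + 1 - step) % n
            lo, hi = bounds[c]
            outgoing.append((c, buf[i][lo:hi]))
        for i, (c, data) in enumerate(outgoing):
            dst = (i + 1) % n
            lo, hi = bounds[c]
            buf[dst][lo:hi] = data

    # Divide the sums by the worker count to get the average gradient.
    return [[v / n for v in row] for row in buf]


workers = [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 7.0, 8.0], [9.0, 10.0, 11.0, 12.0]]
print(ring_allreduce(workers))
# [[5.0, 6.0, 7.0, 8.0], [5.0, 6.0, 7.0, 8.0], [5.0, 6.0, 7.0, 8.0]]
```

Each worker sends roughly 2(n - 1)/n times the gradient size in total, nearly independent of the number of GPUs, which is why ring-style collectives scale well on large clusters.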

“The GPUs did all the heavy lifting for training with high-dimensional data as we used full MRI volumes,”

said Geras.

The model’s performance was validated on 3,936 MRIs from NYU Langone Health. Using three additional datasets, from Duke University, Jagiellonian University Hospital in Poland, and The Cancer Genome Atlas Breast Invasive Carcinoma data collection, the team confirmed that the model works across different populations and data sources.

The researchers compared the model results against five board-certified breast radiology attendings with 2 to 12 years of experience interpreting breast MRI exams. The clinicians interpreted 100 randomly selected MRI studies from the NYU Langone Health data.

The team found no statistically significant difference between the performance of the radiologists and that of the AI system. Averaging the AI and radiologist predictions increased overall accuracy by at least 5%, suggesting that a hybrid approach may be most beneficial.
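A minimal sketch of the hybrid idea: average each case’s AI probability with a radiologist’s probability and score both inputs and the average with ROC AUC, a common metric for this kind of comparison. Treating AUC as the study’s exact measure of “accuracy”, expressing the radiologist’s assessment as a probability, and the helper names `auc` and `hybrid` are all assumptions for illustration; the six cases and their scores are invented, not study data.

```python
# Toy comparison of AI-only, radiologist-only, and averaged ("hybrid") scores.
# All scores and labels below are invented for illustration.
from typing import List, Sequence


def auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC: chance a random malignant case outscores a random benign one (ties = 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def hybrid(ai: Sequence[float], rad: Sequence[float], weight: float = 0.5) -> List[float]:
    """Weighted average of two probability estimates per case (weight=0.5 is a plain mean)."""
    return [weight * a + (1.0 - weight) * r for a, r in zip(ai, rad)]


labels = [1, 1, 1, 0, 0, 0]  # 1 = malignant, 0 = benign (hypothetical)
ai_scores = [0.9, 0.4, 0.7, 0.5, 0.2, 0.3]
rad_scores = [0.6, 0.8, 0.3, 0.2, 0.5, 0.1]

print(round(auc(ai_scores, labels), 3))                      # 0.889
print(round(auc(rad_scores, labels), 3))                     # 0.889
print(round(auc(hybrid(ai_scores, rad_scores), labels), 3))  # 1.0
```

Averaging helps in this toy case because the two readers make different mistakes: the AI underrates the second cancer and the radiologist underrates the third, so each error is offset by the other reader’s confident score. When the errors are correlated, averaging gains less.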

The model was similarly accurate across cancer subtypes, including less common malignancies. Patient demographics such as age and race did not affect the AI system’s performance, even though little training data was available for some groups.

The model output can also be combined with the personal preferences of a clinician or patient when deciding whether to pursue a biopsy after a suspicious finding. By default, all suspicious lesions classified as BI-RADS category 4 are recommended for biopsy, which leads to a substantial number of false positives. The AI model’s predictions can help avoid benign biopsies in up to 20% of all BI-RADS category 4 patients.
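One way to read the biopsy-avoidance figure is as a rule-out threshold: BI-RADS 4 patients whose model probability falls below a cutoff could skip biopsy. The sketch below, assuming a hypothetical ten-patient cohort and a hypothetical rule that sets the cutoff at the lowest malignant score so no cancer is missed, computes the fraction of biopsies avoided. It is not the study’s procedure, and the helper names are illustrative.

```python
# Toy rule-out analysis for a hypothetical BI-RADS 4 cohort.
# The scores, labels, and threshold rule are illustrative assumptions.
from typing import Sequence, Tuple


def rule_out_threshold(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Highest cutoff that still sends every malignant case to biopsy: the lowest malignant score."""
    return min(s for s, y in zip(scores, labels) if y == 1)


def biopsies_avoided(scores: Sequence[float], labels: Sequence[int], threshold: float) -> Tuple[float, int]:
    """Return (fraction of patients scored below threshold, cancers among them)."""
    below = [y for s, y in zip(scores, labels) if s < threshold]
    return len(below) / len(scores), sum(below)


scores = [0.02, 0.05, 0.08, 0.12, 0.30, 0.45, 0.60, 0.70, 0.85, 0.90]
labels = [0, 0, 0, 0, 1, 0, 1, 0, 1, 1]  # 1 = biopsy-proven cancer (hypothetical)

t = rule_out_threshold(scores, labels)
fraction, missed = biopsies_avoided(scores, labels, t)
print(t, fraction, missed)  # 0.3 0.4 0
```

Picking the cutoff on the same patients it is evaluated on is optimistic; in practice it would be fixed on separate data, and a clinician or patient willing to accept more or less risk could move it up or down.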

The authors note that the work has limitations, including open questions about how a hybrid approach would affect radiologists’ decisions in a hospital setting and how the model arrives at its predictions.

“Although we only looked at retrospective data in our study, the results are strong enough that we are confident in the accuracy of the model. We look forward to further translating this work into clinical practice, deploying our AI systems in real life, and improving breast cancer diagnostics for both doctors and patients,”

said study lead author Jan Witowski, a postdoctoral research fellow at NYU School of Medicine.

Source: NVIDIA